Introduction to Searching and Sorting

CSC 385 - Data Structures and Algorithms

University of Illinois Springfield

College of Health, Science, and Technology

Comparing Objects


- The main operation in searching and sorting algorithms is the comparison operation

- If the elements are primitives then the standard comparison operators can be used: <, <=, ==, >=, >

- But if the elements are not primitives but objects, how can we compare them?

- Using ==, for instance, does not compare the contents of objects; it compares whether two references point to the same object

Object Equality Example

- We would like the following code to print true

- Try it yourself!
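A minimal sketch of such a comparison, assuming a hypothetical Rectangle class with int width and height fields and made-up values. As written, with no equals override, both comparisons come out false, which is the problem the next slide addresses:

```java
class EqualityDemo {
    public static void main(String[] args) {
        Rectangle r1 = new Rectangle(3, 4);
        Rectangle r2 = new Rectangle(3, 4);

        // == compares references, so two distinct objects are never ==,
        // even when every field matches.
        System.out.println(r1 == r2);      // prints false

        // Without an override, equals is inherited from Object and
        // also compares references.
        System.out.println(r1.equals(r2)); // prints false
    }
}

// Hypothetical Rectangle with no equals override (assumed for illustration).
class Rectangle {
    final int width;
    final int height;

    Rectangle(int width, int height) {
        this.width = width;
        this.height = height;
    }
}
```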

How to Fix

- Every class created in Java implicitly derives from the base class Object

- We need to override the equals method of the Object class

- Then, instead of == we need to call the method

//Put method inside Rectangle Class

@Override

public boolean equals(Object other) {

if(other == null) {return false;}

if(!(other instanceof Rectangle)) {return false;}

if(other == this) {return true;}

Rectangle otherRect = (Rectangle) other;

return this.width == otherRect.width &&

this.height == otherRect.height;

}

...

//Change r1 == r2 to the following

r1.equals(r2);

Comparing Objects Continued

- While equals handles == for objects, what about < or >?

- We want a solution that keeps our searching and sorting algorithms generic, so they can handle any collection of objects as long as the objects are comparable

- The solution is to implement the Comparable Interface

Java Comparable Interface

- The Comparable interface has one method, compareTo

- This method must be implemented by every class that implements the interface

- If x and y are two objects and we call x.compareTo(y), then the possible results are

Comparable Interface x.compareTo(y)

| Result | Meaning |

|---|---|

| negative integer | x < y |

| zero | x = y |

| positive integer | x > y |
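The table can be tried out on classes that already implement Comparable in the standard library, such as Integer and String. The sketch below is only an illustration: the class name CompareToDemo and the helper sign are made up here, and the helper exists because only the sign of the result carries meaning.

```java
class CompareToDemo {
    // Collapses any compareTo result to -1, 0, or 1 so only the sign is shown.
    static int sign(int result) {
        return Integer.signum(result);
    }

    public static void main(String[] args) {
        Integer x = 3;
        Integer y = 7;
        System.out.println(sign(x.compareTo(y))); // -1, since 3 < 7
        System.out.println(sign(y.compareTo(x))); //  1, since 7 > 3
        System.out.println(sign(x.compareTo(3))); //  0, since 3 = 3

        // String compares lexicographically: "apple" comes before "banana".
        System.out.println(sign("apple".compareTo("banana"))); // -1
    }
}
```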

Implementing Comparable Interface

- Using the Rectangle class previously, let us implement the Comparable interface

- Start by adding implements Comparable<Rectangle> to the end of the class header

- Then implement the compareTo method, making sure we follow the result rules from above

Comparable Rectangles

public class Rectangle implements Comparable<Rectangle>{

//instance variables and constructor

@Override

public int compareTo(Rectangle other) {

int area = this.width * this.height;

int otherArea = other.width * other.height;

//if negative then this < other

//if positive then this > other

//if 0 then this = other

return area - otherArea;

}

}

Linear Search


- Linear (A.K.A Sequential) Search

- Starts at one end of the array and proceeds to the other end

- If it finds the target element then it returns

- If it reaches the end then the element does not exist

- Depending on what question we are asking we will either return an index or a boolean

- If the question is “Where is the target element?” then an index is returned, or -1 if the element does not exist

- Usually, only the first occurrence index is returned

- Often the method is called indexOf

- If the question is “Does the element exist in the array?” then a boolean is returned

- Often this method is called contains

Linear Search as IndexOf

- This search returns an index

//Linear Search Implementation

public int indexOf(Object array[], Object targetValue) {

for(int i = 0; i < array.length; i++) {

if(array[i].equals(targetValue)) {

return i;

}

}

//Element does not exist

return -1;

}

- This code should be very familiar, as any time you have iterated through an array looking for a value you have been doing a linear search

Linear Search Finding Element

Linear Search Element DNE

Linear Search as Contains
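A minimal sketch of the contains variant, assuming the same array-of-Object parameter as the indexOf version and, like it, assuming the array holds no null elements. The class name LinearSearch is made up for this example.

```java
class LinearSearch {
    // Answers "does the element exist in the array?" with a boolean.
    static boolean contains(Object[] array, Object target) {
        for (Object element : array) {
            if (element.equals(target)) {
                return true; // stop at the first match
            }
        }
        // Reached the end without a match, so the element does not exist.
        return false;
    }

    public static void main(String[] args) {
        Object[] names = {"Ada", "Grace", "Edsger"};
        System.out.println(contains(names, "Grace")); // true
        System.out.println(contains(names, "Linus")); // false
    }
}
```

The only difference from indexOf is the return type: true replaces the index and false replaces -1.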

Recursive Linear Search as IndexOf

//Linear Search Implementation

public int indexOf(Object array[], Object targetElement) {

return indexOf(array, targetElement, 0);

}

public int indexOf(Object array[], Object targetElement, int index) {

if(index >= array.length) {

return -1;

}

if(array[index].equals(targetElement)) {

return index;

}

return indexOf(array, targetElement, index + 1);

}

A Quick Aside About Recursive Linear Search

- Use a for-loop or while-loop with Java as the recursive version is inefficient resource-wise

- Why see the recursive version?

- Some languages favor recursion over for loops or while loops, or lack such loops entirely.

- Examples: LISP, Haskell, Erlang

Time Efficiency of the Linear Search

- It is conventional to measure the time efficiency of searching and sorting in terms of the number of comparisons, not the total number of statements executed

- This means counting how many times the condition in the if statement is evaluated.

Time Efficiency of the Linear Search

- Best Case: The search target is the first item we look at. This means the comparison only happens once which is constant time \(O(1)\)

- Worst Case: The search target is either the last item looked at or not in the array. This means the comparison is dependent upon the size of the array since each element must be looked at. Each element is only investigated once so \(O(n)\).

- Average Case: On average, only about half the array will need to be looked at. This means we need to investigate roughly \(\frac{n}{2}\) elements, which is \(O(\frac{n}{2})\), and that is just \(O(n)\) in disguise because constant factors are dropped.
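The half-the-array figure can be checked with a short calculation. It assumes (this is not stated on the slide) that the target is present and equally likely to be at each of the \(n\) positions. Finding it at position \(k\) costs \(k\) comparisons, so the expected number of comparisons is

\[ \frac{1}{n} \sum_{k=1}^{n} k = \frac{1}{n} \cdot \frac{n(n + 1)}{2} = \frac{n + 1}{2} \]

which grows linearly in \(n\).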

Binary Search


- Assumption: Collection is Sorted

- Consequence

- If sorted in increasing order, then \(a_i \leq a_{i+1}\)

- If sorted in decreasing order, then \(a_i \geq a_{i+1}\)

- If we compare the middle element to the target then depending on whether the target is greater than or less than the middle element we can eliminate the need to search half the array

A Representation of Binary Search

Binary Search Explanation

- Assumption: Sorted in increasing order

- If the data is sorted we can look at the middle item

- If the target is less than the middle item then we know that target is in the lower half of the collection

- If the target is greater than the middle item then we know that the target is in the upper half of the array

- We can ignore the halves of the array by using additional int values to represent the high index and the low index and moving them accordingly

Binary Search Visualization

Binary Search as Index Of

//Binary Search Implementation

public int indexOf(Comparable array[], Comparable target) {

int low = 0;

int high = array.length - 1;

int mid;

while(low <= high) {

mid = low + (high - low) / 2;

int result = target.compareTo(array[mid]);

if(result < 0) {// target < array[mid]

high = mid - 1; //ignore upper half of subarray

} else if(result > 0) { //target > array[mid]

low = mid + 1; //ignore lower half of array

} else { //target == array[mid]

return mid;

}

}

//target does not exist in array

return -1;

}

Binary Search Iterative Version Notes

- Notice the low + (high - low) / 2

- This is preferred over the simpler (low + high) / 2 because the simpler version can result in integer overflow for large enough arrays.

- The result of the compareTo method is stored in an int, and this is what is compared to determine whether the target is less than, greater than, or equal to the element in the middle of the subarray

- The mid - 1 and mid + 1 are used to ignore the value that was already checked
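The overflow mentioned in the notes can be reproduced without allocating a huge array. In the sketch below the index values are chosen only to force the problem, and the names MidpointDemo, naiveMid and safeMid are made up for the illustration.

```java
class MidpointDemo {
    static int naiveMid(int low, int high) {
        // low + high can exceed Integer.MAX_VALUE and wrap to a negative number.
        return (low + high) / 2;
    }

    static int safeMid(int low, int high) {
        // high - low stays in range whenever 0 <= low <= high.
        return low + (high - low) / 2;
    }

    public static void main(String[] args) {
        int low = 1_500_000_000;
        int high = 2_000_000_000;
        System.out.println(naiveMid(low, high) < 0); // true: the sum wrapped around
        System.out.println(safeMid(low, high));      // 1750000000
    }
}
```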

Recursive Binary Search

//Binary Search Implementation

public int indexOf(Comparable array[], Comparable target) {

return binarySearch(array, target, 0, array.length - 1);

}

private int binarySearch(Comparable array[], Comparable target, int low, int high) {

if(low > high) {

return -1;

}

int mid = low + (high - low) / 2;

int result = target.compareTo(array[mid]);

if(result < 0) {

return binarySearch(array, target, low, mid - 1);

} else if(result > 0) {

return binarySearch(array, target, mid + 1, high);

} else {

return mid;

}

}

Binary Search Recursive Version Notes

- Most of the logic is the same

- One obvious change is the removal of the while loop

- The next obvious change is that, instead of reassigning low and high, we pass their new values in the recursive calls

Time Efficiency

- Best Case: Just like linear search, if it is the first value we look at then we have constant time checks \(O(1)\)

- Worst Case: Like linear search, if the item is not in the collection then we maximize our time. Unlike the linear search, however, the runtime is \(O(log n)\). More on that in the next slide.

- Average Case: Similar to linear search, it is somewhere in the middle. Unlike linear search, we still have \(O(log n)\)

Why Log N

- First, at each iteration of the search we are reducing the size of the array by half

- We can set up the following inequality

\[ \frac{n}{2^i} \geq 1 \]

- Here, \(n\) is the size of the array and \(i\) is the i’th iteration

- Now just solve for \(i\) since we need to know how many iterations we need to make

\[ \begin{align*} \frac{n}{2^i} &\geq 1 \\ n &\geq 2^i \\ log_2(n) &\geq i \end{align*} \]

Recurrence Relation

- Recurrence Relation

\[ \begin{align*} T(1) &= 1 \\ T(n) &= T(\frac{n}{2}) + 1 \end{align*} \]

Expand Recurrence Relation

Suppose \(n = 16\)

\[ \begin{align*} T(16) &= T(\frac{16}{2}) + 1 = T(8) + 1 \\ T(8) &= T(\frac{8}{2}) + 1 = T(4) + 1 \\ T(4) &= T(\frac{4}{2}) + 1 = T(2) + 1 \\ T(2) &= T(\frac{2}{2}) + 1 = T(1) + 1 \\ T(1) &= 1 \end{align*} \]

\[ \begin{align*} T(1) &= 1 \\ T(2) &= T(1) + 1 = 1 + 1 = 2 \\ T(4) &= T(2) + 1 = 2 + 1 = 3 \\ T(8) &= T(4) + 1 = 3 + 1 = 4 \\ T(16) &= T(8) + 1 = 4 + 1 = 5 \end{align*} \]

We actually end up with \(T(n) = log_2(n) + 1\) which is still \(O(log n)\)
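The expansion above can also be checked mechanically. The sketch below evaluates the recurrence directly and compares it with the closed form for powers of 2; the names BinarySearchRecurrence, t and closedForm are made up for the illustration.

```java
class BinarySearchRecurrence {
    // T(1) = 1, T(n) = T(n / 2) + 1
    static int t(int n) {
        if (n <= 1) {
            return 1;
        }
        return t(n / 2) + 1;
    }

    // log2(n) + 1, valid as written only when n is a power of 2:
    // for n = 2^k the number of trailing zero bits is exactly k.
    static int closedForm(int n) {
        return Integer.numberOfTrailingZeros(n) + 1;
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 1024; n *= 2) {
            System.out.println("T(" + n + ") = " + t(n)
                    + ", log2(n) + 1 = " + closedForm(n));
        }
    }
}
```

For \(n = 16\) both columns show 5, matching the hand expansion.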

Inductive Proof

Guess: \(T(n) = log_2(n) + 1\)

Base Case \[ T(1) = log_2(1) + 1 = 0 + 1 = 1 ✓ \]

Inductive case

First, we will assume the input size is a power of 2

- \(n = 2^k\)

- This keeps things simple

Inductive Proof Continued

- Next, you might think our goal is \(T(2^{k+1}) = log_2(2^{k+1}) + 1\)

- But let us investigate for a second

- If our input is a power of 2 and our guess is \(log_2(n) + 1\) then when we put \(n = 2^k\) into \(log_2(n) + 1\) we get a nice solution

\[ log_2(2^k) + 1 = k + 1 \]

- So our goal is actually \(T(2^{k + 1}) = k + 2\)

Inductive Proof Continued

- Guess: \(T(2^k) = log_2(2^k) + 1\)

- Goal: \(T(2^{k + 1}) = k + 2\)

\[ \begin{align*} T(2^{k + 1}) &= T(\frac{2^{k + 1}}{2}) + 1 \\ &= T(2^k) + 1 \\ &= (log_2(2^k) + 1) + 1 \\ &= log_2(2^k) + 2 \\ &= k + 2 ✓ \end{align*} \]

Final Notes About Efficiency

- We took a nice road by assuming input size is a power of 2

- We could have used another well studied theorem called the Master Theorem

- Not needed for this course but for those interested

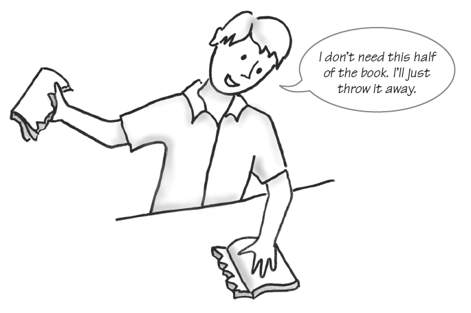

Final Notes on Binary Search

- The binary search can be easily modified for arrays sorted in descending order

- The binary search DOES NOT guarantee it will find the first occurrence

- If the entire collection being searched can be contained in memory then the binary search will always perform better than linear search unless the collection is trivially small

- There is another requirement that the data is stored in contiguous memory in order for the binary search to perform better than the linear search
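The note about the first occurrence can be seen in a small sketch. It reuses the iterative logic from earlier, narrowed to Integer arrays to avoid generics; the class name and the sample data are made up for the illustration.

```java
class BinarySearchDuplicates {
    static int indexOf(Integer[] array, Integer target) {
        int low = 0;
        int high = array.length - 1;
        while (low <= high) {
            int mid = low + (high - low) / 2;
            int result = target.compareTo(array[mid]);
            if (result < 0) {
                high = mid - 1;
            } else if (result > 0) {
                low = mid + 1;
            } else {
                return mid; // returns whichever match is hit first
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        Integer[] data = {1, 2, 2, 2, 3};
        // The first 2 is at index 1, but the very first midpoint is index 2.
        System.out.println(indexOf(data, 2)); // 2
    }
}
```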

Choosing a Search Method

- Linear Search

- Works on both sorted and unsorted collections

- Suitable for smaller collections

- Objects must have a properly implemented equals method

- Binary Search

- Works only on sorted collections

- Suitable for larger collections

- Objects must implement the Comparable interface or have some method to indicate order

- Entire collection must be contiguous and stored in memory

A Lesson Learned

- Tom Scott explains the importance of picking the right search method

Efficiency Table

| | Best Case | Average Case | Worst Case |

|---|---|---|---|

| Linear Search (unsorted data) | \(O(1)\) | \(O(n)\) | \(O(n)\) |

| Linear Search (sorted data) | \(O(1)\) | \(O(n)\) | \(O(n)\) |

| Binary Search (sorted data) | \(O(1)\) | \(O(log n)\) | \(O(log n)\) |

Sorting


- What is sorting?

- The process of arranging things by some order

- For the lecture, when talking about sorting, we will use ascending order

- Ascending order is defined as follows

- Given a collection of elements \(a_1, a_2, a_3, \dots, a_{n - 1}, a_n\) the following holds true

- \(a_i \leq a_{i + 1}\)

- This assumes \(i \neq n\) since that is the last value.


- Descending order is similar except \(a_i \geq a_{i + 1}\)

Data Restriction

- In order for a sorting algorithm to work, data must be comparable or have an ordering

- This largely means Objects must implement the Comparable interface

- When writing the header of the method you can use public static void sort(Comparable a[]), which is similar to the search

- Better would be to use generics but we will save that syntax for later

- Generics in Java can be a blessing and a curse

Considerations

- When picking a sorting algorithm you should consider

- Running Time

- We are always interested in minimizing the running time

- Running time is measured in terms of the number of comparisons + the number of times the collection must be traversed

- Memory Requirement

- Some collections can be sorted entirely in memory

- However, if we are sorting a large amount of data, we might need to store portions of the collection externally

- Performing disk access is more expensive than writing and reading from memory and can affect runtime


Considerations Continued

- Need to also know whether you should use a stable or unstable sort

- Stable vs. Unstable

- A stable sort preserves the order of the duplicate items in the collection

- An unstable sort does not guarantee this

- A stable sort may become important when sorting based on multiple attributes

- Example: consider sorting a deck of cards. You would first want to sort based on value then suit

- If you used an unstable sort for both then when you sort by suit you might not maintain the ordering of the values within their respective suit

Stable vs. Unstable Example

- Collection: \(d, b_1, a, b_2, c\)

- Here we have two \(b\)’s and they are denoted as \(b_1\) for first \(b\) and \(b_2\) for the second \(b\)

Stable Sorts

- Collection: \(d, b_1, a, b_2, c\)

- Stable sort: \(a, b_1, b_2, c, d\)

- \(b_1\) and \(b_2\) are guaranteed to be in this ordering

Unstable Sort

- Collection: \(d, b_1, a, b_2, c\)

- Unstable sort:

- Possible result 1: \(a, b_1, b_2, c, d\)

- Possible result 2: \(a, b_2, b_1, c, d\)

- Here, \(b_1\) and \(b_2\) are not guaranteed to keep their relative ordering
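The stable case can be observed with the standard library, whose documentation states that Arrays.sort on object arrays is stable. The sketch below models \(b_1\) and \(b_2\) as the strings "b1" and "b2" and compares only the first character; the class name and the helper sortByLetter are made up for the illustration.

```java
import java.util.Arrays;
import java.util.Comparator;

class StableSortDemo {
    static String[] sortByLetter(String[] items) {
        String[] copy = items.clone();
        // Only the first character is compared, so "b1" and "b2" count as equal.
        Arrays.sort(copy, Comparator.comparing((String s) -> s.charAt(0)));
        return copy;
    }

    public static void main(String[] args) {
        String[] collection = {"d", "b1", "a", "b2", "c"};
        // A stable sort keeps b1 ahead of b2.
        System.out.println(Arrays.toString(sortByLetter(collection)));
        // [a, b1, b2, c, d]
    }
}
```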

Warning

- If you are not careful, it is possible to turn an algorithmically stable sort into one that is unstable in its implementation!

Lecture Focus

- Your focus in this lecture should be on understanding how the sorting algorithms work and analyzing their time efficiency

- Do not get hung up on and intimidated by the source code!

- Try to understand how it works but do not memorize the code.

- You should also be able to understand the running time of an algorithm by looking at its pseudocode and determine the number of traversals and comparisons

- There are many ways of sorting and organizing data. We are only looking at a small subset of the different sorting algorithms that have been created for both fun and actual use.

Selection Sort


- Algorithm

- Going from left to right, find the smallest item and swap it with the first item. Then start again at the second position, find the smallest item and swap it with the element at the second position. Continue until you reach the last element of the collection which should be already in its sorted position.

Selection Sort Pseudocode

selection sort algorithm(collection) {

for i in 0 to length(collection) - 1 {

smallest = i

for j in (i + 1) to length(collection) - 1 {

if collection[j] < collection[smallest] {

smallest = j

}

}

swap(collection, i, smallest)

}

}

Runtime Efficiency

- The algorithm uses two nested loops

- The inner loop depends on the outer loop's counter

- This tells us the Worst Case is \(O(n^2)\)

- What about Best Case?

- Well, we can reason that the inner loop always runs from i + 1 to the end of the collection for each iteration of the outer loop, no matter how the data is ordered

- This is indicative of \(O(n^2)\) behavior, so the Best Case is \(O(n^2)\) as well

Selection Sort Practice Question

- Show each step of the selection sort using the following array:

[14, 5, 4, 9, 7, 13, 2]

Selection Sort Answer

Find the first smallest element, 2, and swap it with the element at index 0:

[2, 5, 4, 9, 7, 13, 14]

Find the second smallest, 4, and swap it with the element at index 1:

[2, 4, 5, 9, 7, 13, 14]

Find the third smallest, 5, and swap it with the element at index 2:

[2, 4, 5, 9, 7, 13, 14]

Answer Continued

Find the fourth smallest, 7, and swap it with the element at index 3:

[2, 4, 5, 7, 9, 13, 14]

Find the fifth smallest, 9, and swap it with the element at index 4:

[2, 4, 5, 7, 9, 13, 14]

Find the sixth smallest, 13, and swap it with the element at index 5:

[2, 4, 5, 7, 9, 13, 14] \(\leftarrow\) done
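The selection sort pseudocode could be written in Java roughly as follows. This is a sketch for int arrays only; the class name and the returned comparison counter are additions made here so the \(O(n^2)\) behavior can be observed.

```java
import java.util.Arrays;

class SelectionSortDemo {
    // Sorts the array in place (ascending) and returns how many
    // element comparisons were made along the way.
    static int selectionSort(int[] a) {
        int comparisons = 0;
        for (int i = 0; i < a.length; i++) {
            int smallest = i;
            for (int j = i + 1; j < a.length; j++) {
                comparisons++;
                if (a[j] < a[smallest]) {
                    smallest = j;
                }
            }
            // Swap the smallest remaining item into position i.
            int tmp = a[i];
            a[i] = a[smallest];
            a[smallest] = tmp;
        }
        return comparisons;
    }

    public static void main(String[] args) {
        int[] data = {14, 5, 4, 9, 7, 13, 2};
        int comparisons = selectionSort(data);
        System.out.println(Arrays.toString(data)); // [2, 4, 5, 7, 9, 13, 14]
        System.out.println(comparisons);           // 21, i.e. 6 + 5 + 4 + 3 + 2 + 1
    }
}
```

An already sorted array of the same length also costs 21 comparisons, since the inner loop never exits early.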

Selection Sort Stability

- Is the selection sort stable or unstable?

- Since a swap can carry an item past an equal item elsewhere in the array, this sort is unstable

- Example

| Iteration | List |

|---|---|

| Initial List | \(7_1, 9, 7_2, 3_1, 3_2\) |

| 1 | \(3_1, 9, 7_2, 7_1, 3_2\) |

| 2 | \(3_1, 3_2, 7_2, 7_1, 9\) |

- After the first iteration, \(7_1\) now comes after \(7_2\)

Insertion Sort


- Algorithm

- Start at the position immediately after the first

- So index 1 instead of index 0

- Loop from right to left starting at the position immediately before the current item

- Shift items right as long as they are greater than the currently selected element

- Once an item is less than or equal to the currently selected element, or the start of the collection is reached, insert the currently selected item at the position reached.

- Do this for the remaining items

Insertion Sort Pseudocode

insertion sort algorithm(collection) {

//1 <= i < length

for i in 1 to length(collection) - 1 {

current = collection[i]

//0 <= j < i

for j in (i - 1) to 0 {

if current < collection[j] {

collection[j+1] = collection[j]

} else {

break;

}

}

collection[j+1] = current;

}

}

Insertion Sort Practice Question

- Show the steps for sorting the following array using the insertion sort

[14, 5, 4, 9, 7, 13, 2]

Insertion Sort Answer

- 5 swaps places with 14

[14, 5, 4, 9, 7, 13, 2] \(\rightarrow\) [5, 14, 4, 9, 7, 13, 2]

- 4 swaps places with 14 and 5

[5, 14, 4, 9, 7, 13, 2] \(\rightarrow\) [4, 5, 14, 9, 7, 13, 2]

- 9 swaps places with 14

[4, 5, 14, 9, 7, 13, 2] \(\rightarrow\) [4, 5, 9, 14, 7, 13, 2]

Answer Continued

- 7 swaps places with 14 and 9

[4, 5, 9, 14, 7, 13, 2] \(\rightarrow\) [4, 5, 7, 9, 14, 13, 2]

- 13 swaps places with 14

[4, 5, 7, 9, 14, 13, 2] \(\rightarrow\) [4, 5, 7, 9, 13, 14, 2]

- 2 swaps places with 14, 13, 9, 7, 5, and 4

[4, 5, 7, 9, 13, 14, 2] \(\rightarrow\) [2, 4, 5, 7, 9, 13, 14]

Answer Continued

Total number of comparisons: 16

5 < 14 - 1 comparison

4 < 14, 4 < 5 - 2 comparisons

9 < 14, 9 < 5 - 2 comparisons

7 < 14, 7 < 9, 7 < 5 - 3 comparisons

13 < 14, 13 < 9 - 2 comparisons

2 < 14, 2 < 13, 2 < 9, 2 < 7, 2 < 5, 2 < 4 - 6 comparisons
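The count above can be reproduced with a sketch of the insertion sort pseudocode in Java. It handles int arrays only, and the class name and the comparison counter are additions made here for the illustration.

```java
import java.util.Arrays;

class InsertionSortDemo {
    // Sorts the array in place (ascending) and returns the number of
    // "current < a[j]" comparisons performed.
    static int insertionSort(int[] a) {
        int comparisons = 0;
        for (int i = 1; i < a.length; i++) {
            int current = a[i];
            int j = i - 1;
            while (j >= 0) {
                comparisons++;
                if (current < a[j]) {
                    a[j + 1] = a[j]; // shift the larger item one place right
                    j--;
                } else {
                    break; // found an item that is not greater than current
                }
            }
            a[j + 1] = current;
        }
        return comparisons;
    }

    public static void main(String[] args) {
        int[] data = {14, 5, 4, 9, 7, 13, 2};
        System.out.println(insertionSort(data));   // 16
        System.out.println(Arrays.toString(data)); // [2, 4, 5, 7, 9, 13, 14]
    }
}
```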

Time Efficiency

- Worst Case: We see the inner for-loop relies on the outer for-loop's variable so \(O(n^2)\)

- Best Case: Not so clear because we can prematurely exit out of the inner loop. Imagine the array is already sorted. The inner loop would only perform 1 comparison per element. This indicates an \(O(n)\) runtime.

- Average Case: Still \(O(n^2)\), however, if the data is partially sorted this algorithm does less work

- Selection sort is always \(O(n^2)\) regardless of the initial order of the data.

Insertion Sort Stability

- Because no swaps occur in the middle of the collection, this sort is stable

- However, you can easily make the insertion sort unstable by accident by changing the inequality from

if current < collection[j]

to

if current <= collection[j]

Shell Sort


- Shell sort is an improvement of the insertion sort proposed by Donald Shell

- The insertion sort is simple, often useful, but

- inefficient for larger collections

- Entries move only to adjacent locations so many items need to be shifted in order to place an item into its sorted position

- Shell sort moves entries beyond adjacent locations

- The key is to sort subarrays in which the items in each subarray are spaced evenly apart (for example, every 5th item)

- Gradually decrease the gap between the elements in the subarray until eventually the gap becomes 1

Properties of Increment Sequence

- The gradual decrease in the size of the gap is defined by an increment sequence

- The sequence must be a sequence of integers sorted in ascending order

- The first integer in the sequence must be 1

- All values in the sequence are unique

- Example of valid increment sequence \(\{1, 3, 5, 7\}\)

- Shell suggested that the initial gap between all indices be \(\frac{n}{2}\) where n is the size of the collection and then halve the value at each pass until it is one

Shell Sort k=6 Example

Shell Sort k=3 Example

Visualize The Sort

Pseudocode

shell sort algorithm(collection) {

for k in length(collection) / 2 to 1 by k = k / 2{

for i in 0 to k - 1 {

incremental insertion sort(collection, i, k)

}

}

}

incremental insertion sort(collection, start, inc) {

for i in start+inc to length(collection) - 1 by i += inc {

for j in i to inc by j -= inc {

if collection[j] < collection[j - inc] {

swap(collection, j, j - inc)

}

}

}

}

Analysis of Shell Sort

- The shell sort algorithm calls insertion sort repeatedly, so why do we say that it performs better? Because,

- The initial sorts are on subarrays that are much smaller than the original one.

- The later sorts are on arrays that are partially sorted and the final sort is on an array that is almost entirely sorted. As we know the more sorted the array the less work is required for insertion sort.

- In the worst case the shell sort is \(O(n^2)\)

- In the average case the shell sort is \(O(n^{1.5})\)
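One way the shell sort pseudocode might look in Java is sketched below, using Shell's halving gaps. It handles int arrays only and shifts items instead of swapping them, a common variation on the pseudocode; the class and method names are made up for the illustration.

```java
import java.util.Arrays;

class ShellSortDemo {
    static void shellSort(int[] a) {
        // Shell's original sequence: n/2, n/4, ..., 1
        for (int gap = a.length / 2; gap >= 1; gap /= 2) {
            // One insertion sort per subarray: start, start + gap, start + 2 * gap, ...
            for (int start = 0; start < gap; start++) {
                incrementalInsertionSort(a, start, gap);
            }
        }
    }

    // Insertion sort restricted to the items spaced gap apart, beginning at start.
    static void incrementalInsertionSort(int[] a, int start, int gap) {
        for (int i = start + gap; i < a.length; i += gap) {
            int current = a[i];
            int j = i - gap;
            while (j >= start && current < a[j]) {
                a[j + gap] = a[j]; // shift the larger item one gap to the right
                j -= gap;
            }
            a[j + gap] = current;
        }
    }

    public static void main(String[] args) {
        int[] data = {14, 5, 4, 9, 7, 13, 2};
        shellSort(data);
        System.out.println(Arrays.toString(data)); // [2, 4, 5, 7, 9, 13, 14]
    }
}
```

The last pass always uses a gap of 1, which is an ordinary insertion sort, so the result is sorted regardless of what the earlier passes did.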

Stability of Shell Sort

- The shell sort, due to the swapping of elements in the middle of the collection, is unstable

- This is different from the insertion sort which was stable

Improvements to Shell Sort

- If we tweak the size of the gap, we can make the algorithm even more efficient:

- One improvement is to avoid even sized gaps.

- Even gaps cause repeated comparisons in several passes

- To avoid even gaps, just add 1 to a gap when it is even

- By avoiding even gaps we improve the worst case from \(O(n^2)\) to \(O(n^{1.5})\)
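The two gap schemes can be compared by listing the gaps each would use. The sketch below is only an illustration: the class and method names are made up, and the gaps are listed in the order the sort uses them, largest first.

```java
import java.util.ArrayList;
import java.util.List;

class GapSequences {
    // Shell's suggestion: start at n / 2 and halve until the gap is 1.
    static List<Integer> halving(int n) {
        List<Integer> gaps = new ArrayList<>();
        for (int gap = n / 2; gap >= 1; gap /= 2) {
            gaps.add(gap);
        }
        return gaps;
    }

    // Same idea, but 1 is added to any even gap before it is used.
    static List<Integer> oddOnly(int n) {
        List<Integer> gaps = new ArrayList<>();
        int gap = n / 2;
        while (gap >= 1) {
            if (gap % 2 == 0) {
                gap++;
            }
            gaps.add(gap);
            gap /= 2;
        }
        return gaps;
    }

    public static void main(String[] args) {
        System.out.println(halving(16)); // [8, 4, 2, 1]
        System.out.println(oddOnly(16)); // [9, 5, 3, 1]
    }
}
```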

Sorting Efficiency Overview

| | Best Case | Average Case | Worst Case |

|---|---|---|---|

| Selection sort | \(O(n^2)\) | \(O(n^2)\) | \(O(n^2)\) |

| Insertion Sort | \(O(n)\) | \(O(n^2)\) | \(O(n^2)\) |

| Shell Sort | \(O(n)\) | \(O(n^{1.5})\) | \(O(n^2)\) or \(O(n^{1.5})\) |

Comparator Interface


- Suppose we have objects that are either not comparable or have an implementation of the Comparable interface that isn’t what we want for sorting

- We can implement our own comparison code that does what we want. However, any algorithm that should allow a different comparison must then accept an additional argument

- This is where the Comparator interface comes in

- The Comparator interface must be imported from the java.util package

Problem

- We have the following code

class PokerCard implements Comparable<PokerCard>{

public final Suit suit;

public final Value value;

//constructor

public int compareTo(PokerCard other) {

return compare(this.value, other.value);

}

//private compare method

}

- We see that the PokerCard class compares the values of the cards

- We would like to instead compare the suits

- We can make our own Comparator to do so

PokerCard Comparator

public class PokerCardSuitComparator implements Comparator<PokerCard> {

@Override

public int compare(PokerCard pc1, PokerCard pc2) {

//compare suits instead

}

}

- The Comparator interface requires the compare method to be implemented

- This method should follow the same convention as the compareTo method in the Comparable interface

- The only difference is that a class implementing Comparator is separate from the class whose objects it compares

Comparator Expected Results

| Result | Meaning |

|---|---|

| Negative Number | \(pc1 < pc2\) |

| Zero | \(pc1 = pc2\) |

| Positive Number | \(pc1 > pc2\) |
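One possible body for the compare method is sketched below. The slides do not show how Suit and Value are defined, so the two enums here, their constants, and the simplified PokerCard (without its Comparable implementation) are assumptions made only for this example.

```java
import java.util.Arrays;
import java.util.Comparator;

class ComparatorDemo {
    public static void main(String[] args) {
        PokerCard[] hand = {
            new PokerCard(Suit.SPADES, Value.TWO),
            new PokerCard(Suit.CLUBS, Value.ACE),
            new PokerCard(Suit.HEARTS, Value.KING)
        };
        // Arrays.sort accepts a Comparator as its second argument.
        Arrays.sort(hand, new PokerCardSuitComparator());
        System.out.println(Arrays.toString(hand));
        // [ACE of CLUBS, KING of HEARTS, TWO of SPADES]
    }
}

// Hypothetical enums, shortened for the example.
enum Suit { CLUBS, DIAMONDS, HEARTS, SPADES }
enum Value { TWO, THREE, KING, ACE }

class PokerCard {
    public final Suit suit;
    public final Value value;

    PokerCard(Suit suit, Value value) {
        this.suit = suit;
        this.value = value;
    }

    @Override
    public String toString() {
        return value + " of " + suit;
    }
}

class PokerCardSuitComparator implements Comparator<PokerCard> {
    @Override
    public int compare(PokerCard pc1, PokerCard pc2) {
        // Enum.compareTo orders constants by declaration position,
        // so CLUBS < DIAMONDS < HEARTS < SPADES here.
        return pc1.suit.compareTo(pc2.suit);
    }
}
```

Relying on the declaration order of the enum is a design choice made for brevity; a different suit ranking would need its own comparison logic.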

Using a Comparator

- Because implementing a comparator requires us to create a new class, we must create an instance of that class to use it

- We can then pass in the instance to any method that accepts Comparator objects

- That method will then use the compare method of the Comparator object to do comparisons, much as it would use compareTo on any Comparable.

Using a Comparator Sample Code

public static <T> void sort(T array[], Comparator<T> comparator) {

//sort algorithm

//will perform comparator.compare(obj1, obj2) to organize data

}

public static void main(String args[]) {

PokerCardSuitComparator pcsc = new PokerCardSuitComparator();

PokerCard deck[] = new PokerCard[52]; //a size is required; 52 assumes a standard deck

//create random deck

sort(deck, pcsc);

}

Note

Again, we will deal with generics later. The <T> and T syntax above
